Introducing Nexa AI: Empowering On-Device AI Deployment

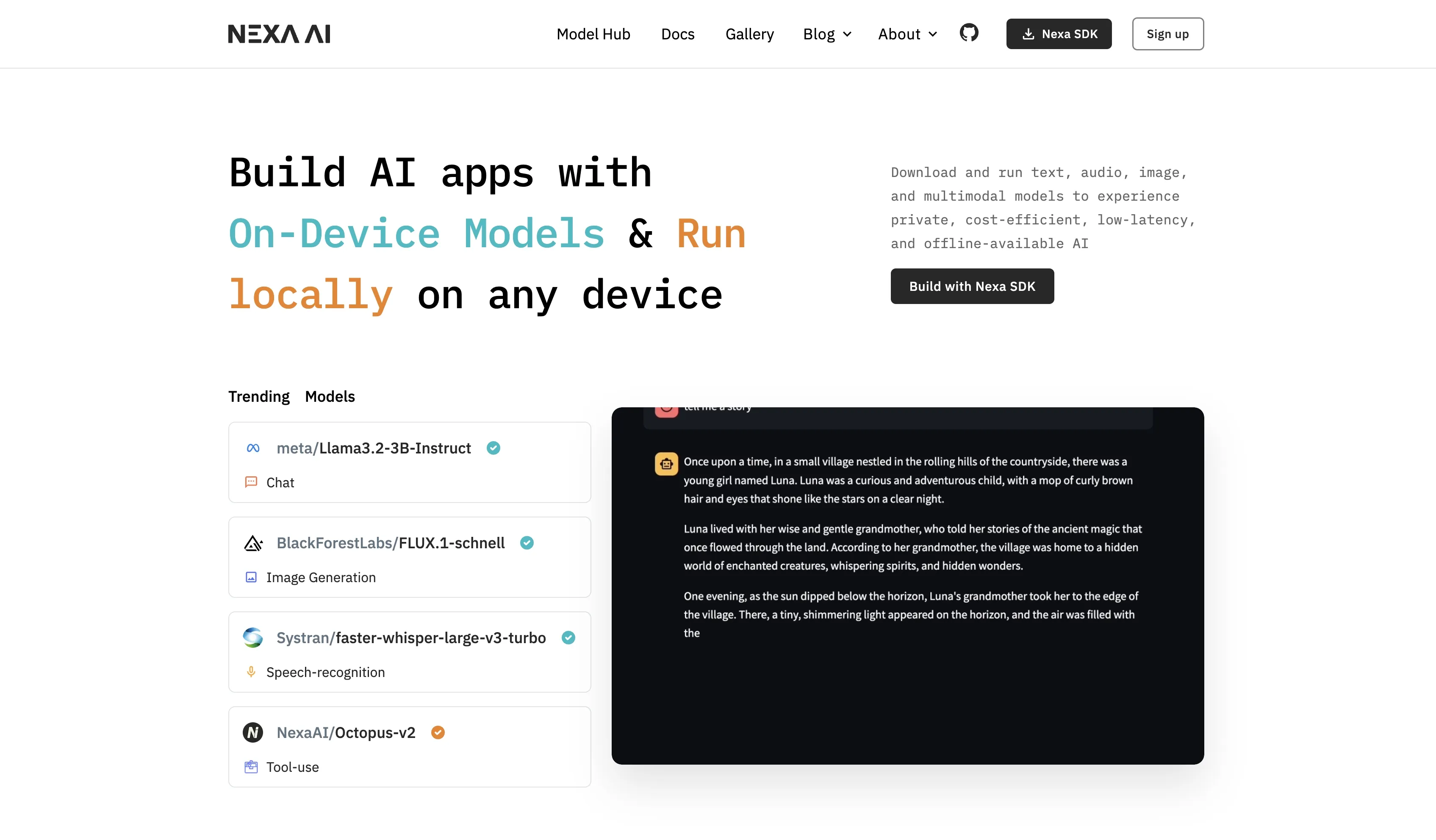

Nexa AI is an open-source platform for deploying AI on-device. It focuses on compact, high-performance multimodal models that run directly on edge devices, so inference stays efficient and user data never has to leave the device.

Key Features:

- Tiny Multimodal Models: Compressed AI models tailored for text, vision, and audio processing.

- Multi-Device Support: Runs on CPUs, GPUs, and NPUs in devices such as PCs, mobile phones, and wearables.

- Local Inference Framework: Supports the ONNX and GGML model formats for on-device processing.

- Privacy-First Design: Ensures complete on-device data processing without relying on cloud services.

- OpenAI-Compatible Server: Exposes an OpenAI-compatible API with support for function calling and streaming responses.
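Because the server speaks the OpenAI API, an OpenAI-style client should be able to talk to it without Nexa-specific code. The following is a minimal sketch using only the Python standard library. The URL, port, model name, and the `get_weather` tool are hypothetical placeholders, not documented Nexa AI values. It builds a chat-completions request with `stream` enabled and an optional tool definition, then reassembles the reply text from the `data:` server-sent-event lines that OpenAI-style streaming endpoints emit.

```python
import json
import urllib.request

# Hypothetical placeholders: point these at wherever your local server listens.
BASE_URL = "http://localhost:8000/v1/chat/completions"
MODEL = "example-local-model"

# Hypothetical tool definition in the OpenAI function-calling schema.
WEATHER_TOOL = {
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Look up the current weather for a city.",
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    },
}


def build_chat_request(prompt, tools=None, stream=True):
    """Build an OpenAI-style chat-completions request body."""
    body = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
        "stream": stream,
    }
    if tools:
        body["tools"] = tools
    return body


def collect_stream(lines):
    """Join the text deltas from OpenAI-style server-sent events.

    Only `delta.content` is collected; tool-call deltas are ignored here.
    """
    parts = []
    for raw in lines:
        line = raw.strip()
        if not line.startswith("data:"):
            continue  # skip blank keep-alive lines and SSE comments
        payload = line[len("data:"):].strip()
        if payload == "[DONE]":
            break  # end-of-stream sentinel
        chunk = json.loads(payload)
        for choice in chunk.get("choices", []):
            text = choice.get("delta", {}).get("content")
            if text:
                parts.append(text)
    return "".join(parts)


def stream_chat(prompt, tools=None):
    """POST a streaming request and return the assembled reply.

    Requires a running OpenAI-compatible server at BASE_URL.
    """
    body = json.dumps(build_chat_request(prompt, tools)).encode("utf-8")
    req = urllib.request.Request(
        BASE_URL, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(req) as resp:
        # The response body is consumed line by line as events arrive.
        return collect_stream(line.decode("utf-8") for line in resp)


if __name__ == "__main__":
    # Offline demo with a canned stream, so no server is needed.
    sample = [
        'data: {"choices": [{"delta": {"role": "assistant"}}]}',
        'data: {"choices": [{"delta": {"content": "Hello"}}]}',
        "",
        'data: {"choices": [{"delta": {"content": ", world"}}]}',
        "data: [DONE]",
    ]
    print(collect_stream(sample))  # Hello, world
```

To keep the parser short, this sketch drops tool-call deltas (`delta.tool_calls`); a client that relies on function calling would accumulate those alongside the text and dispatch the named function once the stream ends.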

Use Cases:

- Enterprise AI Agents

- Personal AI Assistants

- Edge Computing Solutions

- Workflow Automation

- Private Document Intelligence

- Multimodal AI Applications

Technical Specifications:

- Model Sizes: Ranging from sub-1B to 3B parameters.

- Supported Modalities: Text, Vision, Audio.

- Deployment Platforms: Windows, macOS, Linux, Android, iOS.

- Hardware Acceleration Backends: CUDA, Metal, ROCm, Vulkan.

- Compression Techniques: Quantization and token reduction, which lower memory use and speed up inference.
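The parameter counts above translate directly into memory budgets, which is why quantization matters on edge hardware. The sketch below is a generic illustration, not Nexa AI's specific scheme: it estimates weight storage for a 3B-parameter model at several bit widths and applies simple symmetric per-tensor int8 quantization. Production formats often use finer-grained, per-block scales to reduce error further.

```python
def weight_memory_gb(num_params, bits_per_weight):
    """Approximate weight storage in GB (ignores activations, KV cache, and metadata)."""
    return num_params * bits_per_weight / 8 / 1e9


def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: each w is approximated by scale * q."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the symmetric int8 range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map int8 codes back to approximate float weights."""
    return [v * scale for v in q]


if __name__ == "__main__":
    # Prints 6.0 GB, 3.0 GB, and 1.5 GB for 16-, 8-, and 4-bit weights.
    for bits in (16, 8, 4):
        print(f"3B params at {bits}-bit: {weight_memory_gb(3e9, bits):.1f} GB")

    weights = [0.5, -1.27, 0.0, 1.0]
    q, scale = quantize_int8(weights)
    print(q)  # [50, -127, 0, 100]
    print(dequantize(q, scale))
```

Each weight then costs one byte instead of two (FP16), and the per-weight reconstruction error is bounded by half a quantization step, `scale / 2`.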